What if the Metaverse isn't all Marvel character skins, Ariana Grande concerts, NFT art galleries or land auctions?

What if, instead, a large chunk of the Metaverse primarily focuses on extensions of 'reality' - a place for your book club to meet inside a re-creation of the novel's setting, your own personal gallery of cute photos of your cat (rendered as 3D holograms), or a place to browse for furniture for your home?

What if one of the largest drivers of traffic to the Metaverse is Facebook? What if people arrive in the world you've created within the Metaverse because of an ad on Facebook or Instagram, or because someone "liked" your build or shared a 3D movie of a concert you held - and it went viral on Instagram?

How will experiences be created when there's an expectation that someone will first engage with some little "nugget" of content on Instagram? How will those experiences be influenced by someone's arrival from a social stream instead of a gaming portal?

These scenarios become plausible when we start to think about what drives Facebook: while VR headsets or AR glasses might make a lot of money, they will never have the reach or value of 2.89 billion user accounts.

If we think about how Facebook would benefit from an open Metaverse, we realize that the main benefits (at least in the next decade) will come from extending the functionality of the Facebook app and Instagram.

As such, we may see a far more 'real' experience of spatial worlds than we generally imagine. They will extend your Facebook Groups, be an add-on to your Facebook page, or be the result of adding a 3D store when you run a Facebook ad.

And while we may still attend Ariana Grande concerts in the Fortnite corner of the Metaverse or hang out at the Bored Apes Yacht Club, Facebook might also manage to take a slice of value out of that time by getting you there in the first place.

How We Get To The Metaverse

Consider this: you're browsing Instagram and see an ad for a pair of shoes. Even with today's technology (whether in Snapchat, IG or elsewhere) you can view these shoes on your physical feet because of the power of your phone's camera and the supporting AI.

Have a look at this demo (click through to see video on Twitter):

Pretty cool! Digital and physical worlds blurring together.

But what happens if (5 years from now) you add a button that says: "visit store in 3D"?

- If you've been 'seeing' these virtual shoes through your AR glasses, the store materializes in the room around you

- If you're on your phone you can either view the virtual showroom on your phone or bounce it over to your laptop

Or, if you've been shoe shopping in VR, that same store is also accessible. Just hang a left after the dance club while wearing your virtual reality glasses.

Regardless of how you get there, it has been a short hop from some micro piece of content into a 3D experience.

Linking Spatial Experiences

Now, let's say that store has a teleport button. Or maybe you can just walk down the virtual street. And you can visit a sock store owned by another brand.

You've experienced seamless interoperability between an app (Facebook/Instagram) and a 3D space, and the 3D space is connected to others. This interoperability works across devices: from phone to glasses, from VR to computer screen. From one virtual space (or world) to another.

You've entered the Metaverse. And Instagram was a natural entry point.

Oh...and Facebook made bank on that initial ad. Or maybe they even helped the shoe company set up their store.

Facebook doesn't need to own the shoe store in the Metaverse. Sure - maybe they rented some server space. But they might also just link across to a shoe store in Decentraland or Epicland.

They keep making money the way they always have.

But there's more.

Your Avatar Is You

I made the following speculation (click to see the discussion):

Ready Player Me lets you create an avatar. The avatar is 'interoperable'. Meaning, the avatar you create can enter a bunch of different 'worlds'.

No need to create a new one for every virtual space you enter. You reduce the friction which currently exists between being interested and participating: the set-up time has been reduced.

It's an ambitious and much-needed functionality as we head towards interconnected 3D games and virtual worlds.

After all, why do I need to keep signing up every time I enter a new place? And why do I need to create a new avatar for every experience?

My speculation above, however, was in recognition that Facebook has one massive strategic value: it already has 2.89 billion user accounts.

Imagine if it could automatically generate an avatar for every one of those 2.89 billion accounts. Maybe it scrapes their profile photos or something to create an avatar that looks like them right out of the box. No work needed - you just wake up one day and have one.

By eliminating the friction in creating an avatar identity, Facebook would suddenly have a massive avatar population ready to roam the Metaverse.

(By the way, Snapchat is on a similar trajectory. And so is Apple, although with less utility).

And Facebook would want these avatars to be interoperable. Because if Facebook can promise its users that their avatars can travel across the Metaverse then it is giving them an incentive.

Why would your aunt create an avatar using any other system? Facebook already gave her one.

And what about the virtual worlds themselves - all of those spaces which make up the Metaverse?

No brand is going to turn down access to an audience of 2.89 billion. And neither will the little shop keepers, the museums, the companies selling socks or your local council.

That's a lot of users. All of them with avatars. All of them ready to travel.

But...Anonymous!

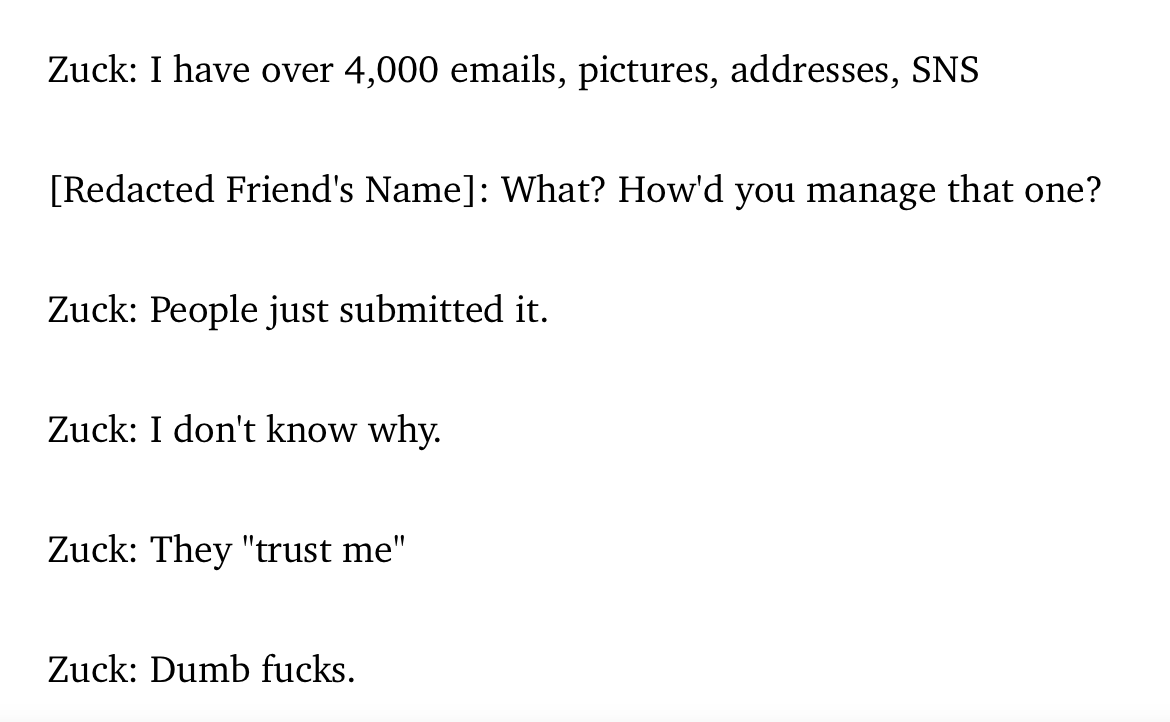

Now - will those avatars link back to the real names of the people behind them? Will your avatar have your credit card attached to it? Will you have a pop-up friends list so you can invite them along for your book club or whatever? Will your interests on Facebook help to recommend cool places for you to visit in the Metaverse?

Yes. And most people will be fine with that.

Let me repeat that: Yes. And most people will be fine with that.

Most people don't care about pseudoanonymity now. And most people won't. They aren't jumping into another world to play a fantasy game.

They just want to try on shoes.

Or maybe they want to hang out with their book club. Because suddenly every group on Facebook has a little icon that says "meet in 3D". And you don't need to set up an avatar, it's cheap (or free) to rent "space", and you really want to know that you're chatting with your Aunt Sally and not randomCatLover21237.

[By the way - I am not saying any of this because I necessarily support it. But I'm trying to recognize that pseudoanonymity is more of a concern inside tech circles than outside them].

Facebook Doesn't Want to Build a Dystopia

(It just wants to make money helping you to get there).

Mark Zuckerberg announced that Facebook will become a Metaverse company within 5 years:

"And my hope, if we do this well, I think over the next five years or so, in this next chapter of our company, I think we will effectively transition from people seeing us as primarily being a social media company to being a metaverse company."

Somehow, a consensus seemed to emerge that this meant Facebook wants to own the Metaverse. And a lot of people seem to equate this solely with Oculus or with the idea that Facebook has plans for some ad-infested dystopia.

But these ideas don't make either technical or strategic sense:

First, no one can OWN the Metaverse. Mark himself makes this point:

"The metaverse is a vision that spans many companies — the whole industry. You can think about it as the successor to the mobile internet. And it’s certainly not something that any one company is going to build, but I think a big part of our next chapter is going to hopefully be contributing to building that, in partnership with a lot of other companies and creators and developers."

Second, it isn't even technically possible for someone to own the full stack of the Metaverse.

We don't even need to argue over your personal definition of the word "Metaverse".

There are too many players, too many blockchain worlds, too many games, too many Epics and Niantics running around with their own Metaverse plans. All of these 3D spaces will link up because the technology already exists to make that happen.

The only limitation is a lack of agreement on standards.

(And yes, with today's tech, the connections would be flaky, we'll need 5G and edge computing etc etc. I didn't say today's Metaverse would be any good - just that the inventions are already mostly there, we're just searching for consensus on how to hook it all together).

Third, Mark said that Facebook will shift from being a social media company to a metaverse one, but he didn't say he's abandoning his business model. It doesn't make strategic sense for Facebook to throw out its business model, its reliance on its main apps, or its focus on advertising and monetizing attention.

In other words, Facebook is best served by extending what has worked for it so well before.

And finally, Mark himself said that this wasn't about virtual reality:

"But the metaverse isn’t just virtual reality. It’s going to be accessible across all of our different computing platforms; VR and AR, but also PC, and also mobile devices and game consoles. Speaking of which, a lot of people also think about the metaverse as primarily something that’s about gaming. And I think entertainment is clearly going to be a big part of it, but I don’t think that this is just gaming. I think that this is a persistent, synchronous environment where we can be together, which I think is probably going to resemble some kind of a hybrid between the social platforms that we see today, but an environment where you’re embodied in it."

But What About VR?

But doesn't this ignore Oculus and the work Facebook is doing on AR glasses?

Not really. But it's important to see those as components of, rather than key drivers of, the Facebook Metaverse strategy.

I personally think that their investment in optical devices will end up being seen as a defensive move against an emerging generation of new 'wearables'.

Just like no one will own the Metaverse, no one will own the AR or VR markets. We might each end up owning (those of us with the means to do so) 3-4 pairs of glasses for different situations and experiences.

Facebook might end up being a market leader in VR. They may even win the battle with Apple, Niantic and a dozen other companies for AR.

But these will still be niche markets for at least a decade.

In the meantime, the Metaverse will be built, will grow, and will attract upwards of a billion users. And so, at least for the next decade, the true strategic value for Facebook will be driven by its apps and how they link to these new worlds.

Data In and Data Out

We think of Facebook as a walled garden. But it's not.

Facebook is everywhere. 17% of websites have a Facebook pixel. This pixel lets Facebook track you...even when you aren't on Facebook. 8.4 million websites send data back to Facebook.

And then there's social sign-in. 90% of people who use social login on sites other than Facebook use...Facebook.

In other words, a large part of Facebook's business model has always been collating data from outside its "main apps".

Why would the Metaverse be any different?

Facebook doesn't own those 8.4 million websites.

With the Metaverse, the ways that content will flow across digital domains will shift.

Scan Everything

I got to thinking about this after a lengthy chat with Keith Jordan, one of the smartest technologists around (in large part because his insights are grounded in, well, reality).

He outlined a scenario where Facebook could use photogrammetry to capture memories of an event and allow you to relive the experience in 3D.

Take a scan of the wedding cake, the bride on the steps of the church, and your drunk uncle passed out in the corner and bingo - you can now relive the special day in the Metaverse (or post the scans on your Facebook page).

Add scanning to Instagram and you suddenly have a new generation of photos. And to properly "see" a recreation of your wedding day, a virtual environment seems pretty ideal.

It's just another example of how Facebook will attempt to capture value as information and 3D content moves across domains.

And if Facebook can take a huge chunk of the gateways into the Metaverse, the avatars we use to travel when we get there, and the apps we post our Metaverse memories to...then don't they get a bigger win than selling a bunch more VR headsets?

Or maybe you think Facebook is planning to change its business model? If so, I'd suggest this deep dive by Napkin Math on "Is Facebook Fixable?". I concur with most of what it says, but I'll leave it to you to draw your own conclusions.

A Metaverse Strategy

None of this is possible for Facebook without an open Metaverse. At the simplest level, the above scenarios aren't possible unless:

- There is a universal standard for linking to a 3D space. You need to be able to click that button in the shoe ad and have it take you to a shoe store.

- There's a universal standard for allowing avatars to move seamlessly into a 3D world. If you need to sign up for a new account every time you click that "see in 3D" button, no one will come.

Now, I don't know what Facebook's real plans are. But the above speculation serves a few purposes:

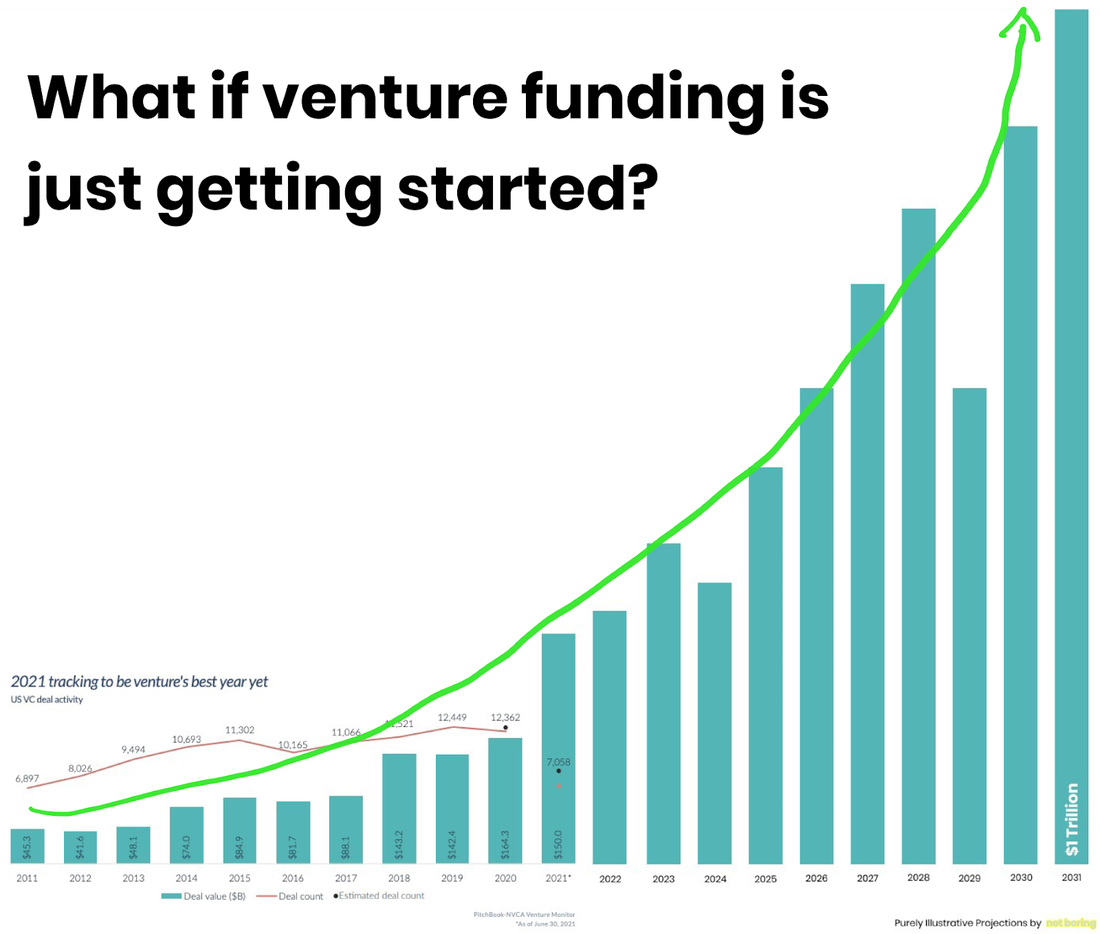

- It helps to visualize that the emergence of the Metaverse will be driven, in part, by the interests of extremely large and well-financed players.

- It shows how a company like Facebook can be supportive of an open Metaverse because it has the most to gain: it's one of the few companies in the world with a billion people who are 'avatar ready'.

- It reminds us that the Metaverse may have huge sections which are more "real" than we might imagine. It will have book clubs or city council offices, scans of our pets or 3D photos of our wedding, endless stores with shoes or furniture.

- It demonstrates that while we might want pseudoanonymity or distributed blockchain identity systems - this will come up against the awesome power of Facebook and its 2.89 billion accounts. Most of whom don't care. And most of whom, frankly, just want to meet up with their best friend or uncle, and see them by their name.

- It helps us to understand that many of the entry points to the Metaverse might use "legacy" systems. As soon as we create a universal standard for virtual world URLs, Facebook can start to monetize the links. A shoe ad on Instagram is as likely a way to get into the Metaverse as any other source - and even a concert needs Facebook's insane reach if it wants more than 100 people to show up.

- And finally, it reminds us that the Metaverse is about experiences. No one cares whether the Metaverse starts or ends at their glasses. They care that they were able to hang out with their friends, or buy shoes, or see Ariana Grande - whether she appears on the floor of their living room, via a VR headset, or on a computer screen. You were synchronous with others. You were there. And the device you use to see her won't really matter to you, the user.

Now, this is just one hypothesis for Facebook's Metaverse future. But even if it's the correct hypothesis there's still a wild card.

Because Facebook isn't the only player.

As much as Facebook and the other big players will be throwing billions at the strategic problem of ensuring their relevance in the Metaverse, they're still up against a powerful force: the people with a passion for building something better, who want to create something that leaves some of these old paradigms behind.

In other words, Facebook may be big and powerful, but the mighty have fallen before.

And they will fall again. Just don't expect them to go down without a fight.