Flaw in Right-Wing ‘Election Integrity’ App Exposes Voter-Suppression Plan and User Data

Meta has added another privacy sanction to its extensive collection: South Korea’s data protection agency fined the social media giant around $15.7 million for processing sensitive user data and passing it to advertisers without a proper legal basis, Reuters reports. Seoul’s Personal Information Protection Commission (PIPC) found Facebook’s parent collected information from about 980,000 users, […]

© 2024 TechCrunch. All rights reserved. For personal use only.

You likely have never heard of Babel Street or Location X, but chances are good that they know a lot about you and anyone else you know who keeps a phone nearby around the clock.

Reston, Virginia-based Babel Street is the little-known firm behind Location X, a service with the capability to track the locations of hundreds of millions of phone users over sustained periods of time. Ostensibly, Babel Street limits the use of the service to personnel and contractors of US government law enforcement agencies, including state entities. Despite the restriction, an individual working on behalf of a company that helps people remove their personal information from consumer data broker databases was recently able to obtain a two-week free trial by (truthfully) telling Babel Street he was considering performing contracting work for a government agency in the future.

KrebsOnSecurity, one of five news outlets that obtained access to the data produced during the trial, said that one capability of Location X is the ability to draw a line between two states or other locations—or a shape around a building, street block, or entire city—and see a historical record of Internet-connected devices that traversed those boundaries.
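To make the "draw a shape and see who crossed it" capability concrete, here is a minimal sketch of how such a query could work in principle. It is not Babel Street's implementation, which is not public; the function names, the sample device IDs, and the flat x/y treatment of coordinates are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# (longitude, latitude), treated as flat x/y for simplicity (an assumption)
Point = Tuple[float, float]


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count how many polygon edges a ray to the right crosses."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def devices_crossing(tracks: Dict[str, List[Point]], polygon: List[Point]) -> List[str]:
    """Return IDs of devices whose consecutive pings switch between inside and outside."""
    crossed = []
    for device_id, pings in tracks.items():
        states = [point_in_polygon(p, polygon) for p in pings]
        if any(a != b for a, b in zip(states, states[1:])):
            crossed.append(device_id)
    return sorted(crossed)


# Hypothetical data: a square "fence" and three made-up device histories.
fence = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
tracks = {
    "device-a": [(-1.0, 2.0), (1.0, 2.0), (5.0, 2.0)],  # enters, then leaves
    "device-b": [(5.0, 5.0), (6.0, 6.0)],               # always outside
    "device-c": [(1.0, 1.0), (2.0, 3.0)],               # always inside
}
print(devices_crossing(tracks, fence))
```

The point of the sketch is how little is needed: given a store of timestamped pings per advertising ID, a boundary query reduces to basic geometry.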


Pavel Durov, the founder of the chat app Telegram, was arrested in late August in France on charges that the company hasn’t done enough to prevent malicious and illegal activity on the app.

One might be tempted to think that Telegram’s high level of data protection would prevent it from effectively addressing malicious activity on the platform: If Telegram can’t read its users’ messages, it can’t spot lawbreakers. Founded in 2013, Telegram has positioned itself as a privacy-focused, secure messaging platform that prioritizes user freedom and data protection. Durov has emphasized his strong commitment to privacy and free speech. In a tweet about the arrest, Durov wrote “Our experience is shaped by our mission to protect our users in authoritarian regimes.”

However, a closer look at the platform’s technology shows that privacy on Telegram is, at best, fragile.

First, while Telegram’s client-side code was made open source, the server-side code was never opened to the public. This violates a widely embraced idea in cryptography known as Kerckhoffs’s principle, which states that everything in a cryptosystem should be public knowledge, except for the secret keys themselves.


While client code, which runs on users’ devices, is responsible for implementing private chats through end-to-end encryption, the server code, which runs on Telegram’s proprietary data centers, could do a lot of things that privacy-focused software is not supposed to do—for example, it can collect metadata, which includes statistics on user activities and geolocations, monitor and even eavesdrop on non-encrypted conversations, and report the information to third parties such as intelligence services or commercial corporations that could misuse it. Because the server code is closed source, there is no guarantee that Telegram does not just retain this information forever. If Telegram does, they could report that information when officially requested by someone, or even worse, provide an opportunity for hackers to leak it, even after you think you’ve deleted it.

Second, even Telegram’s approach to encryption on the client side is not optimal for privacy-focused software: Telegram’s communication is not encrypted end-to-end by default.

Most online communication these days is encrypted, which means that the text you send from your browser to a website does not travel across the Internet as clear text, as cryptographers call it, but in encrypted form, typically protected by the standard called Transport Layer Security (TLS). TLS has a clear benefit: it encrypts network messages so that anyone listening to Internet traffic cannot eavesdrop on the data being transmitted. But there is also a downside. The data is encrypted only while it travels across the Internet; it is decrypted by intermediate servers, for example by the Telegram servers. This means that Telegram can read and retain any conversation that is not end-to-end encrypted.


Unlike TLS, end-to-end encryption ensures that the data is encrypted and decrypted using unique encryption keys that are known only to the sender and the recipient. For example, your chat message is encrypted inside your device, a mobile phone or laptop, and sent in its encrypted form through all the servers, including Telegram’s servers, and decrypted only at the other end—inside the recipient’s device.

End-to-end encryption by default would guarantee that Telegram cannot read your messages under any circumstances. In the case of end-to-end encryption, even the fact that the server source code remains proprietary should not affect the security of the encryption because the servers don’t know the encryption keys.
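The difference can be illustrated with a deliberately simplified sketch. This is a toy, not MTProto or the Signal Protocol: the key exchange uses a prime far too small for real use, the cipher is a bare hash-based keystream with no nonce or authentication, and every name here (`RelayServer`, `keypair`, and so on) is hypothetical. It shows only the structural point that a relaying server which never holds the key stores nothing but ciphertext.

```python
import hashlib
import secrets

# Toy Diffie-Hellman parameters (assumption: chosen for brevity, NOT secure).
P = 2**127 - 1  # a Mersenne prime, far too small for real-world use
G = 3


def keypair():
    """Generate a private exponent and the matching public value."""
    private = secrets.randbelow(P - 3) + 2
    public = pow(G, private, P)
    return private, public


def shared_key(my_private: int, their_public: int) -> bytes:
    """Both ends derive the same 32-byte key without ever sending it."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR with SHA-256(key || offset). Same call decrypts.
    Reusing one key for several messages would be insecure; a real design adds nonces."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


class RelayServer:
    """Stands in for the messaging provider: it relays and may retain what it sees."""

    def __init__(self):
        self.stored = []

    def relay(self, sender: str, recipient: str, payload: bytes) -> bytes:
        self.stored.append((sender, recipient, payload))
        return payload


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()
key_alice = shared_key(alice_private, bob_public)
key_bob = shared_key(bob_private, alice_public)

message = b"meet at noon"
server = RelayServer()
delivered = server.relay("alice", "bob", keystream_xor(key_alice, message))

print(keystream_xor(key_bob, delivered))  # Bob recovers the plaintext
print(server.stored[0][2])                # the server holds only ciphertext
```

With transport encryption alone, the equivalent of `key_alice` would be shared with the server rather than with Bob, and `server.stored` would contain the readable message.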

Yet because Telegram’s end-to-end encryption is not enabled by default, many users may overlook this fact, leaving their communications vulnerable to interception or eavesdropping by Telegram personnel, intelligence services, or hackers. In contrast, another popular messaging service, WhatsApp, not only has end-to-end encryption enabled by default but also extends it to group chats—something Telegram lacks entirely. Despite this crucial difference, Telegram inexplicably claims to be “way more secure” than WhatsApp, without offering any proof or reasonable justification.

It is also important to note that even end-to-end encryption does not prevent Telegram from collecting metadata, meaning that even though the text of your messages cannot be read, one can still see when you sent the message and who the recipient is.

Since the server code is not open source, we don’t know how Telegram manages metadata. Even with end-to-end encryption protecting the content of messages, metadata such as the time, geolocation, and identities of users can still be collected and analyzed, revealing patterns and relationships. This means that metadata can compromise privacy by exposing who is communicating, when, and where—even if the messages themselves remain encrypted and unreadable to outsiders.
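A short sketch shows why metadata alone is revealing. The `Envelope` record and the sample log below are invented for illustration; nothing is known here about what Telegram's servers actually record. The ciphertext field is never inspected, yet the relationships and habits still fall out.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Envelope:
    """What a server can see about an end-to-end encrypted message (assumed fields)."""
    sender: str
    recipient: str
    sent_at: datetime
    ciphertext: bytes  # opaque to the server


def contact_counts(log: List[Envelope]) -> Counter:
    """Count messages per pair of users, ignoring direction."""
    return Counter(tuple(sorted((e.sender, e.recipient))) for e in log)


def active_hours(log: List[Envelope], user: str) -> List[int]:
    """Hours of the day at which a user sent messages."""
    return sorted({e.sent_at.hour for e in log if e.sender == user})


# Hypothetical log entries; the ciphertext bytes are arbitrary placeholders.
log = [
    Envelope("alice", "bob", datetime(2024, 9, 1, 23, 5), b"\x8f\x02\x11"),
    Envelope("bob", "alice", datetime(2024, 9, 1, 23, 7), b"\x41\xa0"),
    Envelope("alice", "carol", datetime(2024, 9, 2, 8, 30), b"\x07\x99\x10\x3c"),
]

print(contact_counts(log))
print(active_hours(log, "alice"))
```

Add a location field to each record and the same few lines also answer "where", which is the third element the paragraph above warns about.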

Third, for both end-to-end encrypted and standard chats, Telegram uses a proprietary protocol, called MTProto. Because MTProto is proprietary, the full implementation is not publicly available for scrutiny. Proprietary protocols may contain undisclosed vulnerabilities. MTProto has not undergone comprehensive independent security audits comparable to those performed on open-source protocols like the Signal Protocol (which WhatsApp also uses). So, even for so-called secret chats, there is no guarantee that the implementation is secure.

These technical shortcomings have real-life consequences.


Telegram was blocked in Russia in April 2018 after the company refused to comply with a court order to provide Russian authorities with access to encryption keys, which would have allowed them to decrypt user messages. Despite the ban, Telegram remained accessible to many users in Russia through the use of VPNs and other circumvention tools. In June 2020, Russian authorities suddenly lifted the ban on Telegram. Russia stated that the decision was made in light of Telegram’s willingness to assist in the fight against terrorism by blocking certain channels associated with terrorist activities, although Telegram continued to maintain its stance on user privacy.

But in 2023, Russian opposition activists reported that their messages, although sent through secret chats, had been monitored and read by special forces, which led to their arrests. Telegram suggested that Russian authorities could have gotten access to the chats through a phone-hacking tool like Cellebrite, but the holes in Telegram’s security make it impossible to know for sure.

The struggle between privacy and governmental control is ongoing, and the balance between safeguarding human rights and national security remains a contentious issue. Freedom of speech and privacy are fundamental human rights, but we should be careful about how we use the tools that promise to preserve them. Signal and WhatsApp, unlike Telegram, both have end-to-end encryption enabled by default. In addition, Signal open-sources both the client- and server-side code. This allows security researchers to review the code and confirm that the software is secure and does not conduct surveillance on its users. A full open-source approach would also ensure that private chats are designed in such a way that they cannot be compromised.

Telegram does not offer significantly better privacy or security than average communication services, like Facebook Messenger. When it comes to the niche of truly privacy-centric products—where Telegram is trying hard to position itself—it’s doubtful that Telegram can compete with Signal or even WhatsApp. While even those two aren’t perfect in terms of privacy, they both have a leg up on that self-professed privacy stronghold Telegram.

Cloud Monitor by ManagedMethods is a cloud security solution specifically tailored for technology teams working in the education market. As schools strive to create safe and enriching digital learning environments for students and educators alike, Cloud Monitor stands as the vanguard of cloud security, ensuring data protection and privacy in the ever-evolving edtech space.


At the forefront of innovation, Cloud Monitor harnesses the power of AI-driven technology to provide visibility and control into cloud applications, thereby detecting and thwarting potential security threats. As education embraces the cloud to foster collaborative and flexible learning experiences, safeguarding sensitive student and financial data is critical. Cloud Monitor empowers districts to fulfill their duty of care, securing data while fostering a climate of trust among students, educators, and parents.

Implementing Cloud Monitor is easy: thanks to its user-friendly design and seamless integration with Google Workspace and Microsoft 365, it requires minimal training. Its automated alerts and remediation capabilities enable swift action against any potential breaches, malware attacks, or student safety risks, freeing district technology teams to focus on the million other things they have on their to-do list.

With the ever-evolving data privacy landscape, adhering to industry standards and regulations such as COPPA, FERPA, and a variety of state-level regulations is a must. Cloud Monitor assists districts in maintaining compliance, monitoring data, and detecting policy violations.

Cloud Monitor by ManagedMethods is the ultimate guardian of cloud security and safety for K-12 schools, empowering districts to create safe, secure, and compliant cloud learning environments. For these reasons and more, Cloud Monitor by ManagedMethods is a Cool Tool Award Winner for “Best Security (Cybersecurity, Student safety) Solution” as part of The EdTech Awards 2024 from EdTech Digest.

The post Cloud Monitor by ManagedMethods appeared first on EdTech Digest.

When Los Angeles Unified School District launched a districtwide AI chatbot nicknamed “Ed” in March, officials boasted that it represented a revolutionary new tool that was only possible thanks to generative AI — a personal assistant that could point each student to tailored resources and assignments and playfully nudge and encourage them to keep going.

But last month, just a few months after the fanfare of the public launch event, the district abruptly shut down its Ed chatbot after AllHere Education, the company it contracted to build the system, suddenly furloughed most of its staff, citing financial difficulties. The company had raised more than $12 million in venture capital, and its contract with the LA district was for about $6 million over five years, about half of which the company had already been paid.

It’s not yet clear what happened: LAUSD officials declined interview requests from EdSurge, and officials from AllHere did not respond to requests for comment about the company’s future. A statement issued by the school district said “several educational technology companies are interested in acquiring” AllHere to continue its work, though nothing concrete has been announced.

A tech leader for the school district, which is the nation’s second-largest, told the Los Angeles Times that some information in the Ed system is still available to students and families, just not in chatbot form. But it was the chatbot that was touted as the key innovation, and it relied on human moderators at AllHere, who are no longer actively working on the project, to monitor some of its output.

Some edtech experts contacted by EdSurge say that the implosion of the cutting-edge AI tool offers lessons for other schools and colleges working to make use of generative AI. Most of those lessons, they say, center on a factor that is more difficult than many people realize: the challenges of corralling and safeguarding data.

When leaders from AllHere gave EdSurge a demo of the Ed chatbot in March, back when the company seemed to be thriving and had recently been named to a Time magazine list of the “World’s Top Edtech Companies of 2024,” they were most proud of how the chatbot cut across dozens of tech tools that the school system uses.

“The first job of Ed was, how do you create one unified learning space that brings together all the digital tools, and that eliminates the high number of clicks that otherwise the student would need to navigate through them all?” the company’s then-CEO, Joanna Smith-Griffin, said at the time. (The LAUSD statement said she is no longer with the company.)

Such data integration had not previously been a focus of the company, though. The company’s main expertise was making chatbots that were “designed to mimic real conversations, responding with empathy or humor depending on the student's needs in the moment on an individual level,” according to its website.

Michael Feldstein, a longtime edtech consultant, said that from the first time he heard about the Ed chatbot, he saw the project as too ambitious for a small startup to tackle.

“In order to do the kind of work that they were promising, they needed to gather information about students from many IT systems,” he said. “This is the well-known hard part of edtech.”

Feldstein guesses that to make a chatbot that could seamlessly take data from nearly every critical learning resource at a school, as announced at the splashy press conference in March, it could take 10 times the amount AllHere was being paid.

“There’s no evidence that they had experience as system integrators,” he said of AllHere. “It’s not clear that they had the expertise.”

In fact, a former engineer from AllHere reportedly sent emails to leaders in the school district warning that the company was not handling student data according to best practices of privacy protection, according to an article in The 74, the publication that first reported the implosion of AllHere. The engineer, Chris Whiteley, reportedly told state and district officials that the way the Ed chatbot handled student records put the data at risk of getting hacked. (The school district’s statement defends its privacy practices, saying: “Throughout the development of the Ed platform, Los Angeles Unified has closely reviewed the platform to ensure compliance with applicable privacy laws and regulations, as well as Los Angeles Unified’s own data security and privacy policies, and AllHere is contractually obligated to do the same.”)

LAUSD’s data systems have recently faced breaches that appear unrelated to the Ed chatbot project. Last month hackers claimed to be selling troves of millions of records from LAUSD on the dark web for $1,000. And the hackers behind a breach of Snowflake, a data warehouse provider used by LAUSD, claim to have snatched records of millions of students, including from the district. A more recent breach of Snowflake may have affected LAUSD or other tech companies it works with as well.

“LAUSD maintains an enormous amount of sensitive data. A breach of an integrated data system of LAUSD could affect a staggering number of individuals,” said Doug Levin, co-founder and national director of the K12 Security Information eXchange, in an email interview. He said he is waiting for the district to share more information about what happened. “I am mostly interested in understanding whether any of LAUSD’s edtech vendors were breached and — if so — if other customers of those vendors are at risk,” he said. “This would make it a national issue.”

Meanwhile, what happens to all the student data in the Ed chatbot?

According to the statement released by LAUSD: “Any student data belonging to the District and residing in the Ed platform will continue to be subject to the same privacy and data security protections, regardless of what happens to AllHere as a company.”

A copy of the contract between AllHere and LAUSD, obtained by EdSurge under a public records request, does indicate that all data from the project “will remain the exclusive property of LAUSD.” And the contract contains a provision stating that AllHere “shall delete a student’s covered information upon request of the district.”

Related document: Contract between LAUSD and AllHere Education.

Rob Nelson, executive director for academic technology and planning at the University of Pennsylvania, said the situation does create fresh risks, though.

“Are they taking appropriate technical steps to make sure that data is secure and there won’t be a breach or something intentional by an employee?” Nelson wondered.

James Wiley, a vice president at the education market research firm ListEdTech, said he would have advised AllHere to seek a partner with experience wrangling and managing data.

When he saw a copy of the contract between the school district and AllHere, he said his reaction was, “Why did you sign up for this?,” adding that “some of the data you would need to do this chatbot isn’t even called out in the contract.”

Wiley said that school officials may not have understood how hard it was to do the kind of data integration they were asking for. “I think a lot of times schools and colleges don’t understand how complex their data structure is,” he added. “And you’re assuming a vendor is going to come in and say, ‘It’s here and here.’” But he said it is never that simple.

“Building the Holy Grail of a data-informed, personalized achievement tool is a big job,” he added. “It’s a noble cause, but you have to realize what you have to do to get there.”

For him, the biggest lesson for other schools and colleges is to take a hard look at their data systems before launching a big AI project.

“It’s a cautionary tale,” he concluded. “AI is not going to be a silver bullet here. You’re still going to have to get your house in order before you bring AI in.”

To Nelson, of the University of Pennsylvania, the larger lesson in this unfolding saga is that it’s too soon in the development of generative AI tools to scale up one idea to a whole school district or college campus.

Instead of one multimillion-dollar bet, he said, “let’s invest $10,000 in five projects that are teacher-based, and then listen to what the teachers have to say about it and learn what these tools are going to do well.”


Sharing nude images or other explicit material with someone you are dating or chatting with online: 12% of Dutch people admit to doing this from time to time. That is the finding of a survey of 504 Dutch consumers commissioned by security company Kaspersky. Yet 80% of respondents are aware of the security risks involved.

“Sharing intimate images with a loved one has been common practice for years. However, it carries considerable risks. Consider, for example, the ‘Fappening’ campaign, in which intimate photos of celebrities were leaked and spread across the internet,” says Jornt van der Wiel, Senior Security Researcher at Kaspersky’s Global Research and Analysis Team.

Among ‘ordinary’ people, too, sextortion (in which such images are used to exploit someone) is becoming increasingly common. The same goes for revenge porn, in which private photos or videos of a sexual nature, often originally shared within a relationship of trust, are shared with or distributed to others without consent.

Still, 12% of respondents occasionally share nude images or other explicit content, and 16% have received such material from someone else. But according to the survey, only 27% use secure methods when sharing sensitive or personal content online. For instance, 31% of respondents do not regularly update their privacy settings or do not know how to, and 35% do not review platforms’ security policies or are unaware that such policies exist. In addition, 56% are not aware of, or do not know how to recognize or report, content that has been shared without their consent.

“We advise anyone considering sharing intimate photos to think carefully about the possible consequences and the impact on their life if these photos become public. If you are willing to accept these risks, it is crucial to share the images in a secure way,” says Van der Wiel. Should your privacy be violated anyway, you can go to the police. But only 35% of respondents trust the police when it comes to prosecuting digital privacy violations.

Read the rest of “More than 1 in 10 Dutch people share nude images and explicit material digitally” on Numrush

The further we digitize, the more data we have online. Last year, 89% of Dutch people aged 12 or older said they take measures to protect that data. They take various kinds of steps, such as restricting access to profile information on social media and checking the security of websites.

The share of the Dutch population taking measures to protect its online data rose slightly last year. It was 89% in 2023, compared with 82% in 2021, according to figures from CBS. The Dutch are now mainly restricting access to profile information more often: 70.3% do so now, versus 54.8% in 2021.

The most common measure, however, remains restricting access to location data, which 77.9% now do. The least common measure is having data deleted: only 14.2% of Dutch people did that in 2023.

Source: CBS

The CBS figures show substantial differences between age groups when it comes to data protection. Of Dutch people between 12 and 65, 93% actively protect their personal information. The group aged 18 to 25 is especially active, at no less than 95.8%.

Among older people, a very different picture emerges. Of Dutch people between 65 and 75, 84.8% actively protect their data. Among those over 75, the figure is just 60%. Of course, this may also be because older people are online less often than younger people in the first place and therefore have less personal data there.

Overall, the Netherlands is a front-runner in protecting personal data on the internet. On average, only 67% of Europeans take measures to protect their data. At the same time, the Netherlands also has the largest share of people with internet access in Europe, namely 81%.

Read the rest of “9 in 10 Dutch people take steps to protect personal data on the internet” on Numrush

The myth has it that the earth is held up by a World Turtle. When asked what holds up the World Turtle, the sage replies: "another turtle".

You know the rest. It's turtles all the way down.

Depending on what media you read, you might have heard we're living in an Exponential Age. The pace of change is so fast, and is happening along a curve that's so, well, exponential that it's nearly impossible for the human mind to comprehend.

It's a curve that doesn't just encompass finance or computers, but also climate change and research, genetics and nuclear fusion.

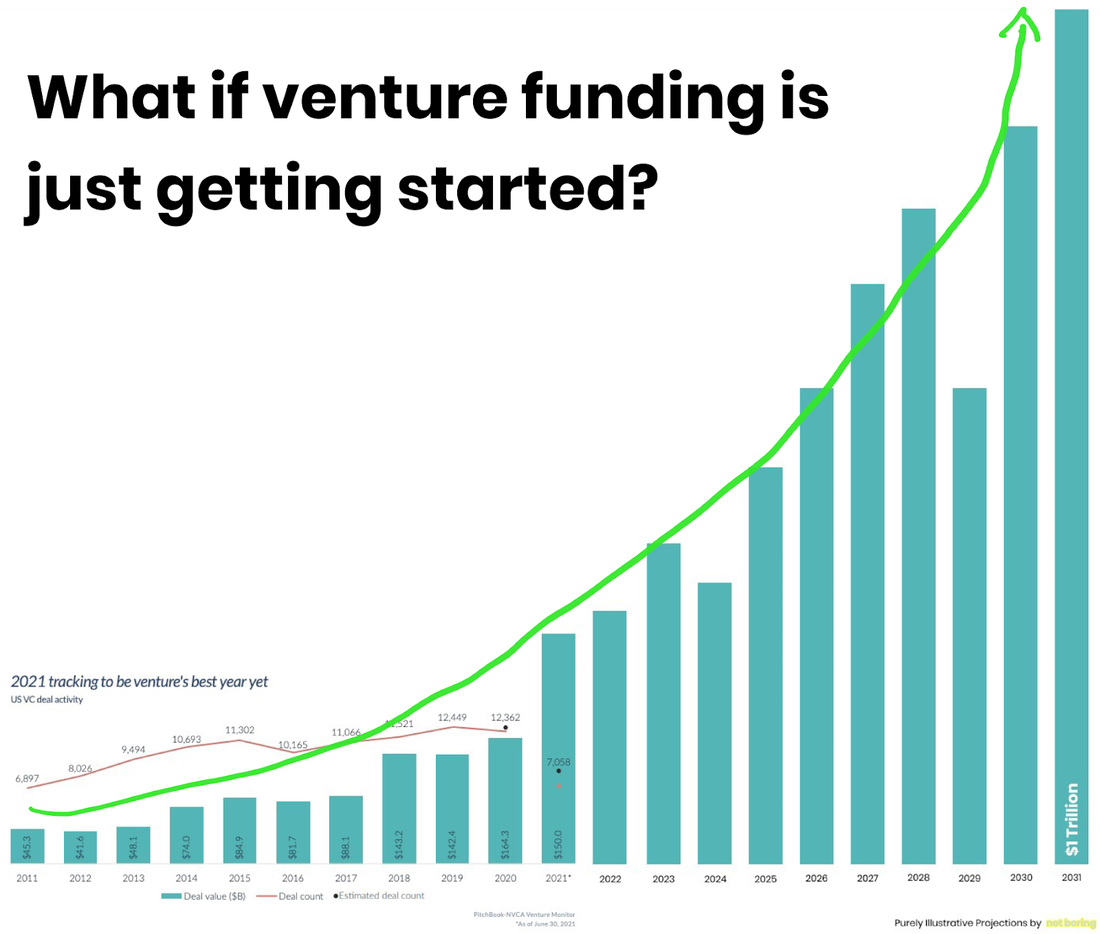

The concept of this age led Packy McCormack to proclaim we might be headed to a Trillion dollar VC future:

Lately, predictions about the Metaverse seem to be rapidly scaling those Exponential peaks. This week Goldman Sachs called it an $8 TRILLION dollar opportunity.

Maybe it's better to create a bar graph of how VCs and analysts value the Metaverse. It would be very Exponential. And it would probably end with Jensen Huang's prediction that “Omniverse or the Metaverse is going to be a new economy that is larger than our current economy.”

(Does that mean our current economy will shrink to zero? Or that it will double? Because isn't the Metaverse also part of "our economy"?)

Regardless - you get the point. The Exponential Age is upon us.

I can feel it.

I can't keep up.

My feed is filled with new AI advances and more realistic virtual worlds, with virtual productions that are almost as good as what Hollywood can produce and robots whose facial expressions look a lot like people.

Oh, and a planet increasingly following an exponential trajectory of its own. And a virus that has taught us all to understand the value of a logarithmic chart.

Maybe you can feel it too? This sense that things are happening so fast, that change is sweeping by us like brush fire, and that we barely have time to recognize it let alone run to keep up.

Turtles might be a by-product of the Exponential Age.

They're everywhere.

How you feel about AI stands on the back of your ideas about intelligence which stand on the back of your ideas about the human mind.

These days even the Turing Test stands on a different turtle than we may have imagined. Alvy Ray Smith argues that Turing set the test out as a commentary on a society that tries to evaluate the mind of a gay man. The Turing Test was a subversive way of asking: "how can you chemically try to castrate me? You can't even tell if it's a real human behind that curtain."

Not the turtle I thought his test was standing on.

But there are lots of other turtles.

The Metaverse is full of them.

How you feel about avatars stands on the back of your ideas about our capacity to identify outside of our own bodies, which stands on the back of our ideas about the importance of the physical world, which stands on the back of our ideas of humanity's place in that world.

If you want to go down THAT rabbit hole, the Convivial Society is there to guide you through a lot of Hannah Arendt and Marxist-adjacent commentary. And honestly? I can buy all of it on certain days. And on others, I can see it as an author in need of a good anthropologist.

Too much philosophy and not enough doing is one of the turtles we can stand on. But its shell is fragile.

At one point, I wrote that I thought avatar identity and virtuality were a sort of proxy affirmation of Gödel's Incompleteness Theorem: no matter how deep we go in trying to find the real 'self', we'll always loop back to where we started.

I am what I am and that includes my avatar. There's no point in finding the final turtle, because it's mathematically impossible to prove that there's one in the first place.

(As a side note, the Incompleteness Theorem has deep relevance to the development of the computer - and is where Turing started in the first place. This creates yet another strange loop where the thing that computers tried to solve ended up creating worlds where their solution was made, well, more 'meta' than we imagined.)

The Exponential Age makes it tough to keep up. Meta, Microsoft, Apple, NVIDIA, Niantic...everyone is piling into the Metaverse.

Sure, maybe you're steeped in this stuff like I am. But most of you aren't. You have day jobs and a dog to walk and you really want to have pizza tonight even though you know you shouldn't.

So how do you decode it? How do you figure out which "metaverse" you want to join? How do you gauge how much fear you should have, or how concerned you should be that we're all about to log-out of reality?

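Well...the turtles are here to help. Or more precisely, three of them: what it means to be human, how humans should relate to each other, and how you describe humanity's relationship to its tools and technology.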

And it goes like this: first, answer those questions for yourself, even if only in a loose way. Second, listen for how people talk about the Metaverse in relation to those three things. Third, compare the two.

What It Means to be Human

Let's start with Meta née Facebook.

To Meta, being human means being connected. In their keynote about their move into the Metaverse it wasn't about finding clean, empty, silent spaces online. It was about connecting. Because to them, that's the human purpose.

Having said that, what they SAY about people and how they act often diverge. Something that goes all the way back to this quote:

Listen for those signals. Whether it's the belief that being human is about work (Microsoft) or play (Niantic) these companies build entire businesses around a singular view of what it means to be human.

How Should Humans Relate to Each Other?

I had an interesting chat on Twitter about decentralized autonomous organizations (DAOs). And it sort of concluded with this:

Now, first, I don't really take any great exception to what Bruce had to say. It was sane, cautious, and skeptical. All good - because there's a LOT to be skeptical about when it comes to some of these new crypto-based models.

But my second thought was: "hold on...so, you're OK with corporations as a structure because they achieve the same outcomes?"

I think that how humans relate to each other is a central question for our times. I've personally come to believe that the "corporate" experiment has run its course.

Whatever this system is we live in is fundamentally broken. We need something better - and I'm willing to throw the dice a bit to see if we can't find it.

But that's me. That's my turtle.

I respect Bruce's turtle also. For him, a corporation is an outcome producing entity and a DAO is a speculative crap shoot.

That's his turtle and I have my own.

But how companies and communities decide this will have a radical impact on how the Metaverse evolves. If we're all satisfied with letting corporations decide our shared future - that's a choice.

Hanging on to old ideas about the efficiency of markets or the glorious power of the corporation (a legal PERSON in some countries) is also a choice. When you hear companies talk up the virtues of the Metaverse, listen carefully to the language they choose around how we'll all interact together.

Do any of them predict their own obsolescence? Or do they continue to act as if the weather is perfectly fine under their corporate umbrellas?

I'm kind of hoping we can come up with something new.

How do you describe humanity's relationship to its tools and technology?

This is the final turtle. And I'll keep it simple:

My own turtle? Technology is a conscious choice made by imperfect people.

Do your own research.

Do you see what I did here?

I created another strange loop.

Because here's the thing: the Metaverse will be massive. It will contain questions that stand on assumptions that stand on questions. It will challenge us to rethink how society is organized, the role of the corporation, the place of waking dreams and the dependence we can create on imaginary places.

As you enter this strange new world, listen carefully to the carnival barkers and corporate shills, the crypto enthusiasts and the coders.

If you listen carefully to how they talk - about people, their relationship to each other, and how they treat their 'tools' - then you can pretty quickly close a lot of doors that should remain shut.

But when you do, you might find yourself questioning whether your own beliefs about these same topics still hold true.

You might start wondering what it means to be human when our capacity for self-expression is no longer shackled to reality, or how we will organize ourselves when corporations aren't the only game in town and when governance and culture happen "on-chain".

And so the strange loop: as we try to understand the Metaverse, we loop back to a deeper understanding of ourselves.

There's a quote I love which was perhaps optimistic for its time. And maybe it's optimistic now also.

When asked about his trip to the moon, Neil Armstrong replied:

"We hope and think that those people shared our belief that this is the beginning of a new era—the beginning of an era when man understands the universe around him, and the beginning of the era when man understands himself."

May you ride most excellent turtles on that journey to understanding.

So...I'd really like to hear from you. If you get this by e-mail, please do reply. I love it when people hit reply.

You can also hit me up on Twitter. I like having chats in the public square when I can.

I also recommend you join the Open Meta Discord (if Discord is your thing).

And if you want something REALLY fun, join me as I explore something that I've been spending a lot of time on. Like, decades. :)

Your avatar may become central to how you spend time online. Instead of browsing websites, we'll increasingly move through spatial environments: whether virtual worlds or a digital overlay on top of reality; whether via VR goggles, AR glasses, or through a 3D web browser.

The concept of the Metaverse, at one of the simplest levels, is the ability to move with relative ease between these digital spaces.

Instead of logging out of Fortnite and logging into Minecraft, we'll just teleport, fly or walk (digitally) between two different parts of the Metaverse.

Let's set aside for the moment that you might need to do a quick costume change when you DO move between two differently-themed areas in the Metaverse.

(How the visual look of your avatar will be handled as more and more virtual worlds connect will be an intriguing challenge. I find it hard to believe that there will be a single "look" for avatars).

Instead, take a minute to understand how profound it is that you're INSIDE the places you visit.

On a website you're mostly invisible (except for, say, an online status indicator).

In the Metaverse, by definition, you will have presence. Other people will see you. You will become a participant in whatever virtual space you land in even if you do nothing more than stand there.

(As a side note, this presence may not always look like a game character. I argue that your car will be an avatar as you drive around town).

The spaces you travel through will include games, social spaces (clubs and concert halls, for example), worlds for education and entire continents filled with licensed/branded content.

In the Metaverse, you aren't just an invisible "user" like you are on the web (tracked by cookies and ad trackers, but a 'user' nonetheless). Your avatar is an agent, a person, an embodiment of YOU. And your presence is an act of participation.

Discord may be the closest thing we have right now to a Metaverse. Although it's privately owned, it embodies a few general principles:

Discord has done a really great job at creating a hierarchy of permissions. Each "world administrator" can set granular controls for channels within a server. They can use plug-ins to set up leaderboards. They can set roles for different groups of people.

Now think about how this would be extended into 3D space.

Because one day, you'll be able to set up your own 'world server' with the same ease as you can on Discord. And people will be able to travel just as seamlessly between servers - bringing part of their identity with them, their wallets, the stickers they have rights to, their Nitros.

But there will now be some other forces at play:

To start, you'll probably end up on one of the massive world "continents" being dreamed up by Epic Games or Niantic, or inside a corporate world hosted by Microsoft and viewed through a HoloLens.

But at some point, these continents (or Metagalaxies) will become increasingly connected. When they DO, that's the Metaverse.

And you'll move through worlds like you've signed up for 100 Discord servers in an afternoon.

Which means you may face 100 walls of text: each one outlining its own rules, privacy policy and terms of service.

Fourteen years ago, I read an interview conducted by Tish Shute (joined by David Levine, a researcher from IBM) with Eben Moglen, founder of the Software Freedom Law Center.

It had a profound impact. Because it challenged my traditional notion of where responsibility should lie when it comes to permissions.

In it, Eben put forth the concept of the clean well-lit room (lightly edited):

I think what we really want to say is something like this. If you are talking about a public space you’re talking about a thing that has not just a TOS contract but a social contract.

It’s a thing which has to do with what you get and what you give up in order to be there.

There ought to be two rules. One: Avatars ought to exist independent of any individual social contract put forward by any particular space. And two: social contracts ought to be available in a machine readable form which allows the avatar projection intelligence to know exactly what the rules are and to allow you to set effective guidelines. I don’t go to spaces where people don’t treat me in ways that I consider to be crucial in my treatment.

It’s one thing to say that the code is open source – let’s even say free software – it is another thing to say that that code has to behave in certain ways and it has to maintain certain rules of social integrity.

It has got to tell you what the rules are of the space where you are. It has to give you an opportunity to make an informed consent about what is going to happen given those rules. It has got to give you an opportunity to know those things in an automatic sort of way so I can set up my avatar to say, you know what, I don’t go to places where I am on video camera all the time. Self, if you are about to walk into a room where there are video cameras on all the time just don’t walk through that door. So I don’t have to sign up and click yes on 27 agreements, I have got an avatar that doesn’t go into places that aren’t clean and well lit.

Or, put it another way:

Your avatar is your embodiment in the Metaverse. Your avatar will 'carry' around a wallet, an inventory and a (hopefully) pseudonymous identity. But it can also carry around a contract. This machine-readable piece of data can be used to "check-in" with virtual spaces and conclude: "No, this space has violence, and your avatar carries around metadata saying you don't want to enter violent spaces".

A lot of years have passed since Eben's concept.

Today, I would revise this slightly:

Your avatar can carry around its terms and conditions. Instead of the responsibility lying with US to agree to the terms and conditions of virtual spaces, the onus should be on the SERVERS. I want servers... I want the spaces in the Metaverse to agree to MY terms and conditions, and not the other way around.

And so my avatar does a handshake before entering a virtual space in the Metaverse. The space itself either agrees to my terms and conditions OR presents a counter-offer.

Imagine you have set your "permissions" to exclude violence, eye gaze tracking, or access to personally identifiable data. The server can counter-offer: "OK, but I need your name in order to let you participate in this educational event".

You can agree (or not). But your agreement happens in a very clear and granular way. You don't need to read a wall of text because the server was obliged to read YOURS and to only highlight the exclusions.
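To make the handshake a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the class names (`AvatarTerms`, `SpacePolicy`, `Offer`), the label strings such as `"real_name"`, and the rule that a space switches off any optional practice a visitor excludes. No such standard exists today, and nothing here touches a blockchain; it only shows the shape of "the server reads MY terms and highlights the exceptions".

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AvatarTerms:
    """Hypothetical: the things this avatar's owner refuses by default."""
    excluded: FrozenSet[str]


@dataclass(frozen=True)
class SpacePolicy:
    """Hypothetical: what a space does, and which practices it cannot drop."""
    name: str
    practices: FrozenSet[str]
    required: FrozenSet[str] = frozenset()


@dataclass(frozen=True)
class Offer:
    """The space's reply: plain acceptance, or a short list of exceptions."""
    status: str  # "accepted" or "counter_offer"
    exceptions: FrozenSet[str] = frozenset()


def handshake(terms: AvatarTerms, space: SpacePolicy) -> Offer:
    # Assumption: optional practices that conflict with the avatar's terms
    # are switched off for this visitor, so only required ones can conflict.
    exceptions = terms.excluded & space.practices & space.required
    if exceptions:
        return Offer("counter_offer", frozenset(exceptions))
    return Offer("accepted")


def may_enter(offer: Offer, approved: FrozenSet[str] = frozenset()) -> bool:
    # Entry is allowed only if the owner approved every highlighted exception.
    return offer.exceptions <= approved


my_terms = AvatarTerms(frozenset({"violence", "eye_gaze_tracking", "real_name"}))
lecture_hall = SpacePolicy(
    name="lecture_hall",
    practices=frozenset({"real_name", "eye_gaze_tracking"}),
    required=frozenset({"real_name"}),
)

offer = handshake(my_terms, lecture_hall)
print(offer.status, sorted(offer.exceptions))  # counter_offer ['real_name']
print(may_enter(offer))                            # False until I agree
print(may_enter(offer, frozenset({"real_name"})))  # True once I do
```

The point of the sketch is the direction of the check: I never see the space's full policy, only the one exception it cannot waive (my name, for the educational event), and I say yes or no to exactly that.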

And this all becomes possible with the blockchain - a public ledger of these brief contracts between ourselves and the virtual worlds we visit.

Theo Priestley recently asked whether Tim Berners-Lee's new privacy initiative could be adapted for the Metaverse.

Which is another way to say: one of the main inventors of the web got it wrong in the first place.

As we move towards interoperability between virtual worlds, and the Metaverse becomes manifest, we don't need to port over all of our old assumptions.

What's perhaps most exciting about NFTs and blockchain is the underpinning value of decentralization and methods for trust. (There are downsides to all of this, but I'll leave that for now).

If, however, we still 'centralize' permissions, even at the micro-level of an individual server (much like how a Discord server can have its own community standards), we'll find ourselves clicking "I AGREE" a lot... again.

Terms of Service, community standards, privacy policies - they will all still be OUR responsibility to read. And let's face it: we don't.

Maybe it's time to flip the script.

Our avatars can be more than social signals. They can contain our list of demands, and it will be up to the servers to meet our demands, instead of the other way around.